TechCrunch reports on the launch of Attributor, a startup that monitors copyright infringement on the web. The timing is interesting: right now there is a mild uproar in some Dutch blog circles about Cozzmozz. Where Attributor only monitors, leaving it to the copyright holders to decide whether to take action and which, Cozzmozz goes a significant step further: it also takes legal action on behalf of the authors (in exchange for a nice cut, of course).

The blog in question quoted a short article in full. Dutch copyright law allows a kind of 'fair use', but it is vaguely formulated, which makes this a borderline case. Quoting a full article is a no-no, but this one was so short that it would have been difficult to leave anything out while still making the argument against the stance taken in the interview. This took place a while ago. Then, out of the blue, several days ago, Cozzmozz threatened to sue, but offered a deal: first 240, then 160 euros instead of 600, which the blogger chose to pay. Although offers of support came flooding in, she is an ME patient with limited energy, and she decided she could not afford a drawn-out affair.

The real danger here is that when copyright holders transfer their rights to outfits that exploit them in exchange for a cut, the grey area between the legal and the moral right disappears. Would the freelance journalist who wrote the article have chosen to sue this blogger herself?

What's legally right is not necessarily what's morally right. Yet another reason to clear up copyright law.

(Edited 6/12, 20:20: made the difference between Attributor and Cozzmozz clearer.)

Tuesday, November 06, 2007

Monday, October 15, 2007

RFID for libraries: HF or UHF? (1)

RFID tags have become common in libraries nowadays, at least in public libraries. Their high circulation rates make the math simple: introducing patron self-service increases efficiency enough for the investment to pay itself back.

For research libraries, with their much lower circulation rates, this advantage is much smaller, and as a result RFID adoption is lagging. But, as a famous Dutch philosopher would say, every disadvantage has its advantage (and vice versa...).

HF: the de facto standard

There are several types of RFID, each operating in a different part of the radio spectrum. The most important are HF and UHF, which operate in the High Frequency and Ultra High Frequency bands respectively. Though the basic technique is the same, the different wavelengths make quite a difference in the details.

The de facto standard for library RFID is HF. It can be considered proven technology these days, and there is a healthy marketplace with numerous vendors offering systems. The reason is historical: when libraries started adopting RFID, HF was the more mature technology, and it was expected that its shortcomings for inventory would be solved in time. This, however, has not happened. HF RFID works well for patron self-service, but is still not reliable enough for inventory.

For research libraries, the advantages of introducing RFID lie much less in self-service and much more in warehousing. Research libraries keep their books much longer, if not indefinitely, and have large closed-stack warehouses. This is where it gets interesting.

UHF vs HF

In recent years, UHF technology has also matured, and it has become the RFID type of choice for industries where tracking and tracing is the most important goal. All the big-name projects use UHF: Wal-Mart, Metro, Marks & Spencer, and numerous others. UHF can scan hundreds of objects per second and, more importantly, it can do so reliably. And here we come to the big difference: the way read failures are handled. With HF, failures are caused by tags that are too close to each other, or that lie parallel to the shelf or a wall. These tags are effectively skipped.

UHF has its share of errors too. However, its tags are much less prone to interference from being placed close together, and the high reading speed means the scanner effectively retries reading a difficult tag numerous times. The main reading errors of UHF are caused by field distortion. Certain materials and shapes can act as a conduit, effectively extending the reader's field in a seemingly random direction. When that happens, the reader will also pick up tags several meters away, rather than just the ones close by.
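The retry effect can be made concrete with a little arithmetic. The sketch below assumes that each read attempt succeeds independently with the same probability p, so a tag is only missed when all n attempts fail, which gives a detection chance of 1 - (1 - p)^n. Independence is an optimistic simplification (a badly placed tag may fail on every single attempt), and the function name and the numbers used are illustrative, not measurements.

```python
def detection_probability(p_single: float, attempts: int) -> float:
    """Chance that at least one of `attempts` read attempts succeeds.

    Assumes every attempt succeeds independently with probability `p_single`.
    """
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be between 0 and 1")
    if attempts < 0:
        raise ValueError("attempts must be non-negative")
    # The tag is missed only if every attempt fails: (1 - p) ** n.
    return 1.0 - (1.0 - p_single) ** attempts


if __name__ == "__main__":
    # Hypothetical "difficult" tag that reads only half the time.
    for n in (1, 3, 10):
        print(n, round(detection_probability(0.5, n), 4))
```

With p = 0.5, one attempt gives 0.5, three give 0.875 and ten give about 0.999: a fast reader that gets many chances per tag turns a flaky tag into a reliable one, as long as the failures are not systematic.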

Field distortion causes its own problems, but of a different kind. The important point is that where HF fails with silent read errors, UHF fails by reading too many tags. Picking up tags that turn out to be several shelves away is a problem when looking for a specific misplaced book. Silent errors, however, are deadly when taking inventory. When you have too many results, you can try to filter out the unwanted ones; when you have too few, there's nothing you can do.
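The asymmetry between too many and too few reads can be sketched as a reconciliation of one shelf scan against the catalogue's list of what should be on that shelf. This is a minimal illustration, assuming the reader reports a signal strength (RSSI, in dBm) for every tag it sees; the `min_rssi` cut-off for discarding weak, probably distant reads is a hypothetical tuning knob, not a vendor setting.

```python
from dataclasses import dataclass


@dataclass
class ShelfReport:
    present: set[str]     # expected on this shelf and read
    unexpected: set[str]  # strong reads of tags not expected here (misplaced books?)
    dropped: set[str]     # weak reads of unexpected tags, discarded as likely strays
    missing: set[str]     # expected but never read: lost book OR silent read failure


def reconcile(expected: set[str], reads: dict[str, float], min_rssi: float) -> ShelfReport:
    """Compare one shelf scan (tag id -> RSSI in dBm) with the expected tag ids."""
    seen = set(reads)
    # Any read of an expected tag counts as evidence that the book is there.
    present = expected & seen
    # Too many results: reads we did not expect, which we can try to filter.
    extra = seen - expected
    unexpected = {tag for tag in extra if reads[tag] >= min_rssi}
    dropped = extra - unexpected
    # Too few results: nothing in the scan data says why these are absent.
    missing = expected - seen
    return ShelfReport(present, unexpected, dropped, missing)
```

Called with expected tags `{"A1", "A2", "A3"}`, reads `{"A1": -40.0, "A2": -58.0, "B7": -72.0, "C3": -45.0}` and `min_rssi=-60.0`, it reports A1 and A2 as present, C3 as unexpected, B7 as dropped and A3 as missing. The first three sets can be checked or filtered further; the `missing` set cannot, because a lost book and a silent read failure look exactly the same.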

UHF promises to deliver. Yet HF is the proven technology. What to do?

To be continued...

Labels:

RFID,

technology,

UHF

Thursday, May 31, 2007

Social Academic referencing: a trial

At the Library of the University of Amsterdam, we've done a small trial to investigate the merits of using a 'social referencing' service. In the trial, scientists from a specific research group used such a service for several months. With a panel discussion before and after, and logging in between, we hoped to get some measurable results on its impact on their work. Not everything went as we hoped, but the resulting report (link below) offers some useful insight into what this group of scientists found useful.

I'll highlight two points that may be of wider interest. First of all, our test group felt strongly about privacy. They liked not just adding citations to a system, but also tagging, rating and even commenting on them extensively for their own use. For sharing, however, they felt it was essential to control who could see what. Rating an article negatively could be helpful for direct peers, but who knows whether its author might one day decide on your grant? The participants wanted clear, fine-grained control. Sometimes it would be fine to share the citation but limit the rating or tags to a certain group; some comments are meant to be private, some are for the whole world; and so on. Because of this, they voted to use BibSonomy at the start of the trial.

However, it turned out that ease of use matters even more than features, and the group did not find the system easy enough. To save the project, we switched to CiteULike and extended the trial period.

I'm curious what others think of this demand for detailed control over privacy settings. While researching next-generation research collaboratories, I again found that scientists consider it paramount to be able to set the privacy level for each item themselves. Not through a helpdesk, a ticket queue and a sysadmin: themselves. The two main contenders to build such systems on, Sakai and SharePoint, provide such a detailed rights structure out of the box. So it's not pie-in-the-sky thinking: it is already out there.
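The kind of per-item, per-field control the participants asked for can be sketched as a small data model. This is a hypothetical illustration, not how BibSonomy, CiteULike, Sakai or SharePoint actually implement it: each part of an entry (citation, tags, rating, comment) gets its own visibility setting chosen by the owner, each entry has a single sharing group for simplicity, and anything without a setting defaults to private.

```python
from dataclasses import dataclass
from enum import Enum


class Visibility(Enum):
    PRIVATE = "private"  # owner only
    GROUP = "group"      # owner plus the entry's sharing group
    PUBLIC = "public"    # everyone


@dataclass
class Entry:
    owner: str
    group: set[str]                    # users the owner shares GROUP fields with
    fields: dict[str, str]             # e.g. citation, tags, rating, comment
    visibility: dict[str, Visibility]  # per-field setting, chosen by the owner


def visible_fields(entry: Entry, viewer: str) -> dict[str, str]:
    """Return only the fields of `entry` that `viewer` is allowed to see."""
    def can_see(level: Visibility) -> bool:
        if viewer == entry.owner or level is Visibility.PUBLIC:
            return True
        return level is Visibility.GROUP and viewer in entry.group

    # Fields without an explicit setting fall back to private.
    return {name: value for name, value in entry.fields.items()
            if can_see(entry.visibility.get(name, Visibility.PRIVATE))}
```

For an entry owned by "ann", shared with "bob", with a public citation, a group-only rating and a private comment, "ann" sees all three fields, "bob" sees the citation and the rating, and anyone else sees only the citation.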

The project page

Download report (pdf)

BTW: yes, we're working on a proper publication.

Labels:

citeulike,

library2.0,

social networking

Linking social networks

Thinking further about social networks and the need to link them, I remembered the classic post by Jason Kottke: Being your friend is hard. It was written in the heyday of the first generation of general social networking sites, before clear winners had emerged, at least in specific segments: geographic (Bebo for the UK, Hyves in the Netherlands), thematic (Facebook) or generational (MySpace).

The fact that these 'winners' have indeed emerged indicates that users flock to communities that are large enough, with 'large enough' being pretty damn big. Too big for individual libraries, that's for sure.

Maybe Aquabrowser's interoperability will be good enough for it to become a de facto standard, as RSS did. For this to work, they need to solve the problem of unique identifiers for any item in the system, whether an ILS item, an internal digital asset or a digital object from an external source. Ideally, this would be the first step towards a generic DOI for objects.

Of course, let's hope it won't turn into the tangled mess that RSS became. As a library community, we'd better keep an eye on this! Interesting times, interesting times.
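One way to picture the identifier requirement is an id that always records which kind of system an item came from and who minted its local id, so that ILS records, local digital assets and external objects can never collide. The sketch below is purely hypothetical: the three source names mirror the three kinds of items mentioned above, and the slash-separated string form is an invented convention, not an Aquabrowser format or a DOI.

```python
from dataclasses import dataclass
from urllib.parse import quote, unquote

# Hypothetical source categories: ILS item, local digital asset, external object.
SOURCES = {"ils", "local", "external"}


@dataclass(frozen=True)
class ItemId:
    source: str     # one of SOURCES
    authority: str  # who minted the local id, e.g. an institution code (hypothetical)
    local_id: str   # the id as known inside that system

    def __post_init__(self) -> None:
        if self.source not in SOURCES:
            raise ValueError(f"unknown source: {self.source}")

    def __str__(self) -> str:
        # Percent-encode every part, including "/", so the separator stays unambiguous.
        return "/".join(quote(part, safe="") for part in (self.source, self.authority, self.local_id))

    @classmethod
    def parse(cls, text: str) -> "ItemId":
        parts = text.split("/")
        if len(parts) != 3:
            raise ValueError(f"expected source/authority/local_id, got {text!r}")
        source, authority, local_id = (unquote(p) for p in parts)
        return cls(source, authority, local_id)
```

For example, `str(ItemId("external", "doi", "10.1000/xyz"))` gives `external/doi/10.1000%2Fxyz`, and parsing that string returns an equal `ItemId`: the slash inside the local id survives the round trip without being mistaken for a separator.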

Labels:

interoperability,

library2.0

Monday, May 28, 2007

Notes on the Stroomt Library 2.0 workshop

Stroomt, a company specializing in 'information optimizing', organised a Library 2.0 theme day (actually an afternoon) this Friday.

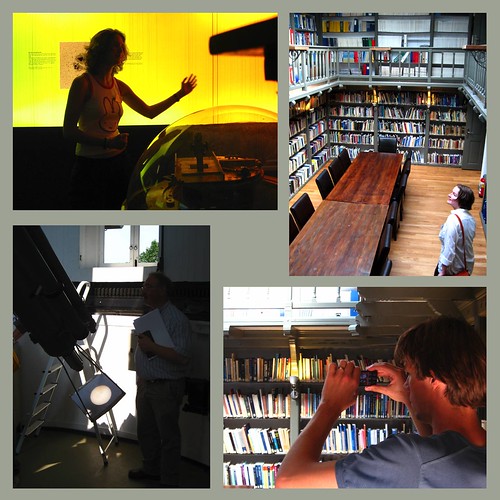

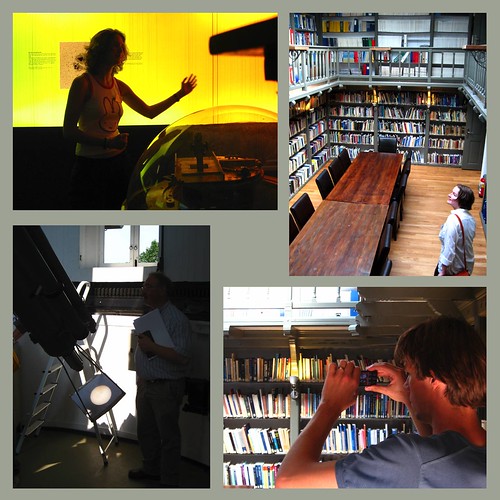

A small impression first. I only took my camera out during the guided tour of the museum where the afternoon was held. The attendees showed polite interest, until the tour ended in the old library room of the observatory and the oohing and aahing commenced...

On to business. A theme of the talks, and of the discussion, was how to make social networks work. Folksonomies depend heavily on scale: the more participants a network has, the better. But in reality, networks are insular, divided, split... For example, you could leave a comment on this picture either here on the blog or on its Flickr page: two different networks that do not connect.

Kudos to Moqub for the full transcripts. Here are a few remarks that piqued my interest.

Liesbeth Mantel (Moqub):

Observation: 75% of internet users do not know the term Web 2.0, but use it all the time (leaving comments, using ratings and 'trust' on eBay, etc.).

Example of a (public) library that has embraced content: Gail Library

I had somehow missed LibraryThing for Libraries, and that's cool! Even though it is still an insular community (libraries only), it packs more users than any single library has patrons.

Menno Rasch (UU):

As part of their portal strategy, they actually have a working portlet for the catalogue, showing books ready to be picked up and books already borrowed, with a renew link! It should be nothing special, but there's no way we could build this with our ILS... (yes, our vendor is a four-letter word). Good for them that they chose Aleph.

And yes, they get it: the portlet can be integrated into other portals *outside* the university's domain, such as Netvibes! Way to go.

But alas, they don't get it when it comes to user-contributed content for Omega: they're very hesitant to add that. Pity. They doubt whether 25k users would be enough to make it useful, and the 'long tail' does not count for them.

Alexander Blanc (SURFnet):

A rather specialised talk on the SURFnet video portal and their plans for it. Much is still unclear. Much relies on material from the (rather expensive) academia license. They would like to become a YouTube, but it'll be an organisational challenge, as the whole model is currently based on institutional use. There are also no plans for supporting CC licensing yet, though he's not ruling it out either.

Taco Ekkel (Aquabrowser):

Aquabrowser has 250 library installations worldwide. Their idea: add user-contributed metadata to these and link them together. That's smart. They promise to do it using open protocols for exchanging such data, which alas have yet to be developed. There'll be an open API, though. If that works out, it'd be terrific.

Interesting approach: no special tools for librarians. They can make lists, rate items and all that jazz, but librarians' accounts are in principle no different from patrons'. I wonder if that will fly with the professionals; letting go of their special status might be a hard sell. I hope it works. I'm sure the pros will still float to the top, simply through the quantity and quality of their contributions, but this way the 'trust' those accounts build up will be based on actual accomplishments.

The charts of Aquabrowser's internals look a lot like Primo's. They hint at full support for integrating federated search sources. Unfortunately, nothing is even planned for bringing all the Library 2.0 goodies that the internal sources get to these federated sources. Technically I understand, but how am I going to explain this to the average researcher? And why?

Finally...

Interesting day. Good to see that Library 2.0 is very much alive in the Dutch library community. Also good to see that this is only the beginning. We've got a long road ahead: thinking on linking social networks together is only just starting, and collaboratories and new forms of use and re-use of information objects weren't even mentioned in the discussions. For which there was, BTW, not enough time: the biggest drawback of the afternoon.

Famous last words

Impromptu business cards: another use for MOO cards!

Labels:

events,

library2.0

Friday, March 09, 2007

A workshop on library 2.0: the next step

In December, Marco Streefkerk and yours truly organised a workshop on Library 2.0. What started as a small meeting grew into quite a gathering, with participants from three Dutch universities, two overview lectures on the challenges we've come to call Library 2.0, and three possible scenarios for getting there.

It was certainly an interesting afternoon, with much food for thought, so I'm glad that most of the presentations are now available online:

http://www.uba.uva.nl/library-2.0-workshop

Labels:

events,

library2.0,

primo

Wednesday, February 14, 2007

Kottke on the popularity of Flickr vs. Fotolog

Jason Kottke, always one to watch, wrote an insightful post putting the popularity of one of Web 2.0's poster children, Flickr, into perspective.

The second point he makes has something in store for research librarians:

2. Flickr is more editorially controlled than Fotolog. The folks who run Flickr subtly and indirectly discourage poor quality photo contributions. Yes, upload your photos, but make them good. And the community reinforces that constraint to the point where it might seem restricting to some. Fotolog doesn't celebrate excellence like that...it's more about the social aspect than the photos.

In other words, not all communities are equal. As academic libraries, we cannot and should not aim to build communities of maximum size. This is where our long tradition of quality over quantity comes in. If that means, for example, that CiteULike won't be as popular as del.icio.us, so be it. There is room for both.

(On a side note, Flickr has some serious i18n work to do if its market share is so low outside the comfortable-with-English world.)

Related to this: social networks need traction from an active community to flourish. They need numbers. That's why I think the University of Michigan health library's use of del.icio.us for its Avian Influenza Resources page is perfect. It works for everyone: for the library, which didn't need to build anything itself; for the library's users, who gained a good resource; and for the wider community of del.icio.us users interested in the topic, since the librarians' work improves the general body of information in the whole system. I have much respect for my colleagues who built the brand-new HvA web resources site, but it's no match.

As libraries, we cannot compete. We shouldn't. On the contrary, we should embrace cooperation, as we should embrace change.

Thursday, February 08, 2007

EU public access petition

Of course, everybody has already signed the Petition for guaranteed public access to publicly-funded research results, right?

But just in case you haven't yet, please do it now. Thanks!
